I’m very excited to have a stand at the Singapore Mini Maker Faire in July and I’ve been working to tidy up two projects to exhibit. One is a refurbished version of my Beerbot (last seen here). The aim behind Beerbot is to be able to fetch a beer from anywhere in the house and bring it to me without the need for me to leave my computer – a noble and selfless goal if ever there was one.

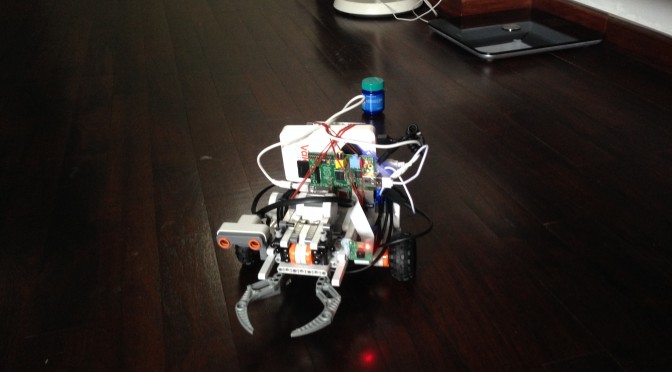

The robot is built around a Lego Mindstorms kit controlled by a Raspberry Pi using the nxt-python package. The Mindstorms kit controls three servos and reads from an ultrasonic distance sensor for beer rangefinding. Two of the servos drive the left and right wheels while the third controls a claw that can close around a full can of drink and hold it just securely enough to drag it across a smooth floor to its thirsty master.

It’s linked to the Pi via a powered USB hub, which also runs a wifi adaptor. Power comes from a big power bank designed for charging phones with two USB ports. The power bank can give 3A of current which is important as the Pi can behave unpredictably if it doesn’t have enough juice.

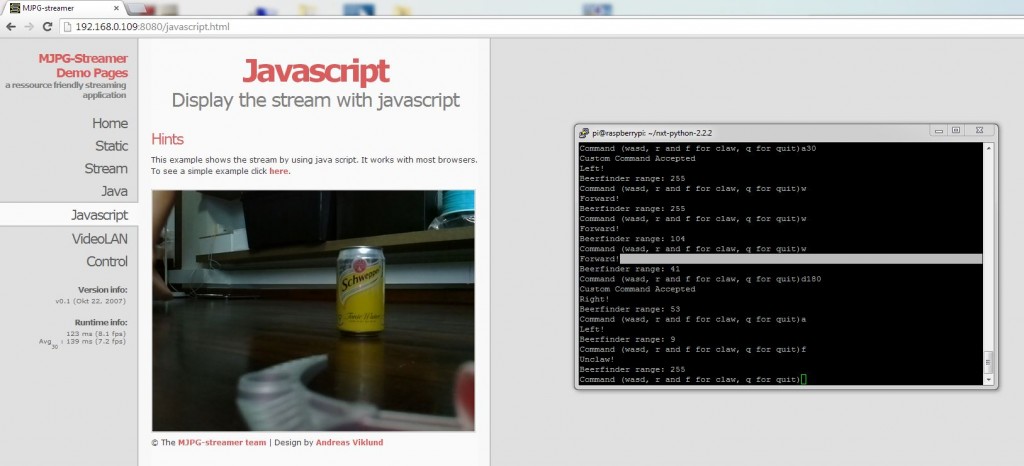

To give the Beerbot vision I’ve attached a Raspberry Pi camera which can stream video to another computer using JacksonLiam’s experimental version of mjpg-streamer with a modification by MacHack. Mjpg-streamer creates a mini web server on the Pi which shows the video stream. I’ve spent many frustrating hours trying to get decent and stable frame rates using mjpg-streamer – the version with these modifications works very well and gives a nice smooth stream.

The Beerbot is steered by creating an SSH connection to the Pi and then running a Python script that allows instructions to be passed to the Mindstorms kit. With the SSH window open on half of the screen and the video stream on the other you can move around and hunt beer with a robot’s-eye view of the world.
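As a rough sketch of how a steering script like this could be structured (this is not the actual Beerbot script), the idea is to map single-letter commands typed into the SSH window onto power levels for the two wheel motors. The key bindings, the power values and the `set_wheels` callback are all assumptions made for illustration. `set_wheels` is where the real nxt-python motor calls would go, and they are left out here because they depend on the nxt-python version installed.

```python
# Hypothetical command table: key -> (left wheel power, right wheel power).
# The values are illustrative and stay within the -100..100 range used for NXT motor power.
DRIVE_COMMANDS = {
    "w": (75, 75),     # forward
    "s": (-75, -75),   # reverse
    "a": (-50, 50),    # spin left on the spot
    "d": (50, -50),    # spin right on the spot
    "x": (0, 0),       # stop
}


def wheel_powers(key):
    """Return (left, right) power for a command key, or None if unrecognised."""
    return DRIVE_COMMANDS.get(key.lower())


def drive_loop(set_wheels, read_key=input):
    """Read commands until 'q', passing wheel powers to set_wheels(left, right).

    set_wheels is a caller-supplied function (an assumption of this sketch)
    that would forward the two power values to the motors.
    """
    while True:
        key = read_key()
        if key == "q":
            set_wheels(0, 0)  # always stop the wheels before exiting
            break
        powers = wheel_powers(key)
        if powers is not None:
            set_wheels(*powers)
```

To try the loop without a robot attached, something like `drive_loop(lambda left, right: print(left, right))` prints the wheel powers for each command entered, with `q` to quit.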

The videos below show a test run: one shows the view on the computer while the other shows what the robot is doing. If you hit play on both videos at once you might be lucky enough to get them in sync (it would be nice to find a way to combine the videos into one split-screen file). To avoid unnecessarily warming beer I used tonic water for the trial. Unfortunately the screen recorder I used didn’t have very good color depth so it doesn’t do proper justice to the video quality, but you can at least get an idea of the frame rate.

It’s surprisingly difficult to steer the robot remotely. Not having peripheral vision or depth perception makes it harder to know where you are, and since the camera is currently a bit off centre it is difficult to know when the target can is right in front of you. I hope to solve this issue with a laser pointer sight, maybe even one that can be turned off and on remotely with the Pi’s outputs. Having an option to ACTIVATE LASER through SSH would be fun. With two lasers you could even get an idea of how far away an object is by putting one beam at an angle and visually checking the distance between the two dots.
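The geometry behind the two-laser idea can be sketched in a few lines. This assumes one laser points straight ahead, the second sits a known distance to the side and is tilted inward by a known angle, and the gap between the dots can be estimated in real units (for example by comparing it with the width of the can). The function name and parameters are made up for illustration; nothing here has been built yet.

```python
import math


def distance_from_dots(baseline_cm, tilt_deg, dot_gap_cm):
    """Estimate the range to the surface the two laser dots land on.

    baseline_cm: spacing between the two lasers at the robot.
    tilt_deg:    inward tilt of the second laser (the first points straight ahead).
    dot_gap_cm:  gap between the dots on the target; negative once the beams
                 have crossed and the dots have swapped sides.

    At range d the gap is baseline - d * tan(tilt), so
    d = (baseline - gap) / tan(tilt).
    """
    if not 0 < tilt_deg < 90:
        raise ValueError("tilt_deg must be between 0 and 90 degrees")
    return (baseline_cm - dot_gap_cm) / math.tan(math.radians(tilt_deg))
```

With this layout the dots merge where the beams cross, at a range of `baseline_cm / tan(tilt)`, so the tilt could be chosen to make the dots overlap exactly when the can is within reach of the claw.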

I hope to install the lasers before the Maker Faire. I also need to set up a little corral where the robot can roam without people tripping over it and a workstation where people can try out controlling it.

Other modifications for this project that I’d like to try out at some point in rough order of complexity:

- Centre mounted camera and range finder

- Some way of stopping the Mindstorms brick from auto powering off after inactivity, or a way of waking it up from the Pi

- Access to the robot from outside the home network

- A jazzed up web page for the video stream that better reflects Beerbot’s personality

- A way of controlling the Python script from the web page so you can click on forward, left, right arrows etc. instead of using SSH

- Replace the Mindstorms elements with an Arduino and off-the-shelf servos

- Replace the Lego structure with a 3D printed chassis

- (The ultimate goal) modify a mini fridge so that it can dispense chilled beer to floor level on command for intercept by the Beerbot
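For the web page control item above, one possible starting point is a tiny HTTP server running on the Pi next to the video stream, built only from Python's standard library. This is a sketch of an idea rather than anything I have running: the command names, the port number and the `on_command` callback (which would pass the command on to the robot) are all assumptions. A page with arrow buttons could then simply request `/forward`, `/left` and so on.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlparse

# Hypothetical set of commands the web page is allowed to send.
COMMANDS = {"forward", "back", "left", "right", "stop", "grab", "release"}


def command_from_path(path):
    """Map a request path such as '/forward' to a command name, or None."""
    name = urlparse(path).path.strip("/").lower()
    return name if name in COMMANDS else None


def make_handler(on_command):
    """Build a request handler that calls on_command(name) for valid requests."""

    class Handler(BaseHTTPRequestHandler):
        def do_GET(self):
            command = command_from_path(self.path)
            if command is None:
                self.send_error(404, "Unknown command")
                return
            on_command(command)  # hand the command over to the robot code
            body = command.encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "text/plain; charset=utf-8")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return Handler


def serve(on_command, port=8000):
    """Serve commands until interrupted; the port number is an arbitrary choice."""
    HTTPServer(("", port), make_handler(on_command)).serve_forever()
```

Running `serve(print)` and visiting `http://<pi-address>:8000/forward` in a browser would print `forward` on the Pi, which is enough to check the plumbing before wiring it up to the motors.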

Some problems I grappled with this time

When I do a post like this I am always amazed that it took me so long to get the thing working. I think the reason is that the post rarely reflects the time spent bashing my head against the wall trying to figure out why something isn’t working. For the sake of anyone else who reads this, these are some of the problems I worked around this time:

- When I first tried out mjpg-streamer all the images were very dark and tinted green. I solved it by adding “-y YUYV” to the launch line using the guide here. Why does this work? I have no idea.

- The non-experimental version of mjpg-streamer kept printing messages to the console saying “mmal: Skipping frame XXX to restart at frame XXX”. This stopped me from putting mjpg-streamer in the background so I couldn’t run the Python script to drive the robot. I saw posts saying that this issue was due to setting the framerate too high, but no matter how low I put the framerate I still got this problem. Using the experimental version described above fixed the issue.

- Running the mjpg-streamer didn’t work for me the first few times because although the instructions said to type

./mjpg_streamer -o "output_http.so -w ./www" -i "input_raspicam.so"

I kept typing

sudo ./mjpg_streamer -o "output_http.so -w ./www" -i "input_raspicam.so"

because I am a Linux noob and in my basic understanding of permissions, commands usually don’t work unless you sudo them. In this case adding sudo means that it doesn’t work. Why? It is the mystery of Linux.

Presenting @ a Maker Faire? Awesome, can’t wait to see the results. I think that you’re winning at Robot (and probably printer too). I must step up my game.

+1 ACTIVATE LASER button. Yes please.

Mad idea: if you used a laser diode to give you range-finding, why not split the beam by shining the laser through a thin piece of glass at the Brewster Angle? This way you can redirect the reflected beam to give you crossing lasers, with the added advantage that the reflected laser beam will be polarised, which should be useful for some reason.

I vaguely remember that the Brewster Angle has something to do with physics, no? My current plan to get multiple beams is to buy multiple laser pointers for $3 each. If I polarised them I’d only have a 50% chance of blinding someone wearing sunglasses.

Hi John!

I’m Sharanya, a writer for the online tech news portal Vulcan Post. We met on Saturday and I had a great time witnessing the BeerBot in action. Was wondering if we could feature the image of the BeerBot (at the top of this post) on our site, with attribution back here? Do also feel free to email me at sharanyapillai17@gmail.com. Thanks so much!

Hi Sharanya, it was great to meet you on Saturday. Thanks for asking about the photo – I will send you a more up to date picture for the post.